
Iztok Franko

This is not another article about airline experimentation. If you read our blog, you know we’ve done plenty of these. This is an article (and podcast) about how to build better airline digital products. And the most important part of building better digital products is to make faster and better decisions.

Before we get into how you can do that, let me tell you something else. These Diggintravel Podcast talks are primarily a medium to let talented airline digital leaders share their ideas. We want airline digital professionals to learn from their peers. But if you want to become a better digital expert, I don’t think that’s enough. You have to look outside our industry as well.

Our Airline Digital Academy students could tell you that I’m a big fan of David Epstein and his book Range. In the book, Epstein explains that if you want to solve complex problems, you need to have a broad skillset and look for solutions outside your area (or industry) of expertise. Here’s an excerpt from Epstein’s newsletter, which explains how one of the world’s best-known immunologists tries to look outside the box for solutions every day:

“Arturo Casadevall, a Johns Hopkins professor and one of the most influential immunologists in the world, once shared with me the advice he gives every colleague: ‘Read something outside your field every day.’ Their typical response? ‘Well, I don’t have time to read outside my field,’ he recounted. ‘I say, “No, you do have time, it’s far more important.” Your world becomes a bigger world, and maybe there’s a moment in which you make connections.’”

I hope our podcast can help you look beyond the airline industry to become a better digital professional. In past episodes, you had the opportunity to learn from innovation and experimentation leader Stefan Thomke, from Harvard Business School; we also talked to Ronny Kohavi (who managed experimentation at Airbnb, Microsoft, and Amazon) and Lukas Vermeer (former Head of Experimentation at Booking.com).

Today, we bring you another great digital expert – Ben Labay, Managing Director at Speero. Speero is a digital optimization agency built by the people who founded the CXL blog, one of the best resources available for conversion optimization and experimentation. Ben is an industry leader, a speaker on all things digital research and experimentation, and a conservation science consultant.

Listen to the new episode of the Diggintravel Podcast about how to leverage experimentation to build better airline digital products via the audio player below, or read on for key highlights from our talk with Ben:

And don’t forget to subscribe to the Diggintravel Podcast in your preferred podcast app to stay on top of airline digital product, analytics, innovation, and other trends!

The first thing I wanted to talk to Ben about is why experimentation matters in the first place. In our airline industry, for some people, experimentation and conversion rate optimization (CRO) are still very niche; they’re still somewhere in the basement. Some airline digital enthusiasts do it, but it’s much harder to get broader business exposure. So, my first question was: how can we explain the value and the benefits of experimentation to marketing and digital people who are not as familiar with it?

You’re right – in a lot of senses, [CRO/experimentation] is a bit of a niche area. I think CRO is its own niche and experimentation also is its own niche. CRO is more on the marketing side; experimentation is more popular as a category especially in the tech/SaaS world and within product, not necessarily having its origins on the marketing side of the fence.

When I have conversations around how this applies more broadly to marketers or how this can transition out of the niche realm and into the more general zeitgeist of business operations, experimentation/CRO is all about decision optimization. It’s a tool used to have faster, more accurate decisions. The goal is not growth. The goal is adaptability. As the market changes, as the environment changes, how can we position ourselves to take advantage or be positioned and not be stuck flatfooted, considering the market and environmental changes?

When I talked to the aforementioned Stefan Thomke, he said experimentation enables a scientific approach to decision-making. The concept Ben is talking about here is very similar: experimentation as a tool to make faster and more accurate decisions when it comes to your airline’s digital product. Ben mentioned one other thing that I see many airlines struggle with – namely, a long decision-making process because most airlines are very hierarchical organizations. The best digital companies are the opposite; they leverage experimentation to empower digital product owners to make decisions on their own, which speeds up decision-making.

Experimentation can be and should be looked at as a tool for creating decision optimization and also for culturally pushing decisions down the organizational ladder, pushing it down into product owners as opposed to decisions being stuck in the top and stuck in the C-suite and stuck in leadership. It’s a tool that leadership is starting to embrace more and more as an operating model to comfortably push decision-making, and strategic decision-making, even, down into product owners.

Another important point about why experimentation really matters is that it allows you to better understand the root cause of your (or better yet, your customers’) problems. It’s a tool for understanding the key friction points in your customer journey process. Looking at the analytics and data is a good start, but as Ben points out, it’s not enough:

Experimentation is an act of intervention. The type of data that it’s creating is categorically different than what you get in analytics. The analytics data is all about the past. Supervised / unsupervised machine learning, Big Data, it’s all about past data. But in new environments, applying that data in a predictive model is very dangerous, and it’s prone to errors and confirmation bias and all sorts of big issues.

So experimentation is different than data that you see, which is analytics. It’s data that you do. You’re actively intervening. You’re changing something, and then you’re measuring based on that change. It’s a new type of paradigm. Categorically, it’s up on the causal ladder. You’re able to not only look at correlation; you’re able to get closer to causation. That’s why it’s not just data-driven and transparent frameworks.

Going beyond data and analytics to understand the root cause is still not enough. The goal should be to make the decision and change things based on the learnings. But Ben went even further when talking about the end goal:

You mentioned the goal is not to test; the goal is to make a decision. The goal is not even to make a decision. I love the mental model of “It’s not enough to know.” We can know exactly what the problem is and what to change, and we can make the change – we can actually make the change on the website, we can improve it, but that’s still only half the battle. You still need to communicate that learning and that change to the whole org.

Even implementing a change and even harvesting the gains, getting the revenue, is still half the battle if you’re not getting that learning to your whole team to where you’re able to stand on top of that learning and reach higher.

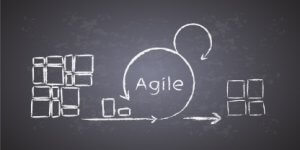

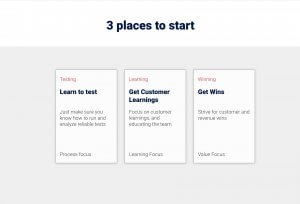

I’m often asked by our readers and podcast listeners about how to start an airline experimentation program. Ben has experience in working with many different companies, and he identified three personas regarding how to start a testing and experimentation program.

There’s three personas of how people start. There’s a revenue persona, a “win” persona. “I want to win now.” They want to come in and start testing what they’ve seen work in some other areas. And this is okay. This is good to start.

They come in and they start testing some things. But what happens is it’ll quickly hit a ceiling, and it can potentially get perverse really quickly. There are agencies, for example, that work on performance models for charging their clients, like “If the test wins, we get a chunk of that.” We get a lot of clients from these agencies, by the way [laughs], after they realize how much of a dumpster fire that model is and how many lawyers and red tape it involves and how it doesn’t incentivize creative thought. Money is really good at incentivizing rote, machine-process type of work. “I pay you money to do factory work.” Money is not a good incentivization tool to get creative work and get inspired and get innovation. We need other tools for that.

Source: Ben Labay

The next focus area Ben sees in experimentation programs is the learning focus.

The other persona is around customer learnings, which is the one I really love. If you go into these business operational framework books, like OKR theory or Get Things Done, there’s only three goal categories for businesses. It’s revenue, customer, and process. All of them talk about all this crazy research they’ve done with companies, and they all say that customer goals are really where you should aim your company. You should have an aspiring mission for your company; it should be centered around the customer. Then everything trickles from there. Your process and your revenue is derivative.

I like this persona a lot. This is what I call customer experience optimization. They come in starting like, “We’re doing user testing. We’re doing polls. Let’s also use experimentation as a tool to get customer learning.” So that’s also a good place to start, just thinking about the customer.

The third and final focus area Ben talked about was the testing or process focus.

The third one, and I’m seeing more and more of this, is what I call experimentation. Experimentation is the moniker that I give that describes the process of intervening in measurement, the process of decision optimization. I give that moniker experimentation, experimentation programs. It involves not only A/B testing, but the decision frameworks, the preregistering, the post mortems. It involves the planning and the road-mapping before the A/B testing. It involves the assessment and integration of product strategy into metric strategy before the planning and road-mapping. So it involves all of those process stages.

We see a lot of bigger companies – MongoDB was a good example, Miro.com. We work with these companies, and they’ll come in and be like, “We’re spaghetti testing.” That’s where they started. They started on one of those two other personas. Let’s stop spaghetti testing and let’s set up a program.

Ben shared tons of other great insights in our podcast talk, so check out the full podcast interview to hear them.


I am passionate about digital marketing and ecommerce, with more than 10 years of experience as a CMO and CIO in travel and multinational companies. I work as a strategic digital marketing and ecommerce consultant for global online travel brands. Constant learning is my main motivation, and this is why I launched Diggintravel.com, a content platform for travel digital marketers to obtain and share knowledge. If you want to learn from me or work with me, check our Academy (learning with me) and Services (working with me) pages in the main menu of our website.
